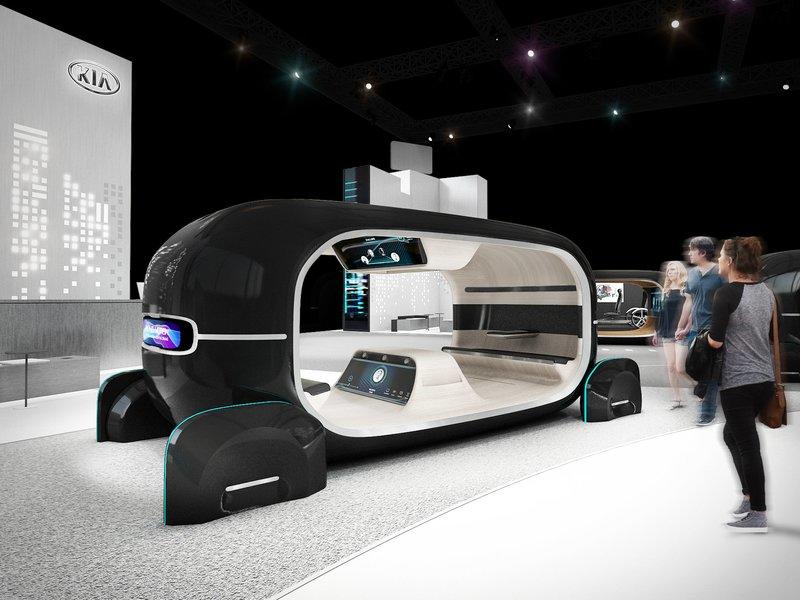

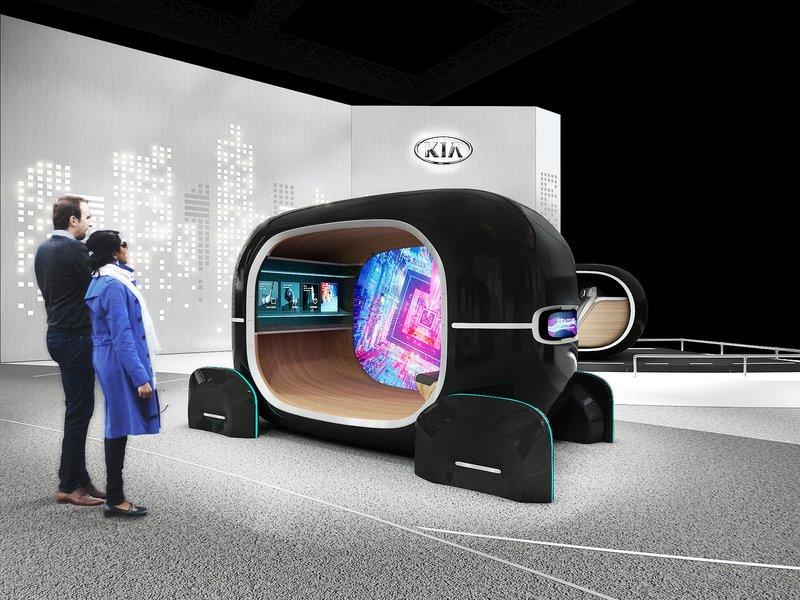

At CES 2019, Kia showed how it envisions interactive life inside autonomous vehicles (AVs). The company partnered with the Massachusetts Institute of Technology (MIT) Media Lab’s Affective Computing Group on its Real-time Emotion Adaptive Driving (R.E.A.D.) system, which will be used in its “SEED Car” concept alongside the company’s V-Touch technology.


Kia’s R.E.A.D. system uses AI to recognize the emotional state of drivers and passengers from facial expressions, electrodermal activity measured at the steering wheel, and heart rate, and then responds in a hopefully helpful way. CES visitors could see how the sensory controls react in real time to their changing emotional state.

According to James Bell, Director of Corporate Communications at Kia Motors America:

“The R.E.A.D. System analyzes a driver’s emotional state in real-time through bio-signal recognition.”

The data gathered helps the AI change the interior environment, adjusting conditions that affect the five senses inside the cabin. Think of it as a way to make the driving experience a positive one by enhancing the cabin environment.


R.E.A.D. uses a music-response vibration system in the seats, similar to some game simulation chairs in which the player feels the control reactions. This sensory-based signal-processing technology adapts seat vibrations according to the sound frequencies of the music being played. The best part is that the seats can be set to massage mode. However, Kia says the system is also designed to enhance safety by providing haptic warnings from the vehicle’s advanced driver-assist systems.
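Kia has not published how the seats turn music into vibration, so the following is only a rough sketch of the general idea: isolate the low-frequency part of an audio frame and use its level as a vibration intensity. The function names, the 120 Hz cutoff, and the simple one-pole filter are all assumptions made for illustration, not Kia's implementation.

```python
import math


def bass_intensity(samples, sample_rate, cutoff_hz=120.0):
    """Return the RMS level of the low-frequency content of an audio frame.

    Hypothetical helper: a one-pole low-pass filter keeps mostly the bass,
    and the RMS of the filtered signal could drive a seat actuator.
    """
    if not samples:
        return 0.0
    # Standard one-pole low-pass coefficient for the chosen cutoff.
    dt = 1.0 / sample_rate
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    alpha = dt / (rc + dt)

    filtered = 0.0
    energy = 0.0
    for x in samples:
        filtered += alpha * (x - filtered)  # smooth out high frequencies
        energy += filtered * filtered
    return math.sqrt(energy / len(samples))


def sine(freq_hz, sample_rate=8000, n=800):
    """Generate a test tone (illustrative input only)."""
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]


if __name__ == "__main__":
    low = bass_intensity(sine(50), 8000)
    high = bass_intensity(sine(2000), 8000)
    print(f"50 Hz tone: {low:.3f}, 2000 Hz tone: {high:.3f}")
```

With this sketch, a bass-heavy tone yields a much higher intensity than a high-pitched one, which matches the idea of seats that pulse with the low end of the music.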

The SEED Car concept is a pedal-electric hybrid vehicle with a range of 100 km. It combines pedal input from the driver with a high degree of electric power assistance to make riding effortless. For longer trips, Kia designed the BIRD Car, an AV shuttle with greater range. Both use the R.E.A.D. system.

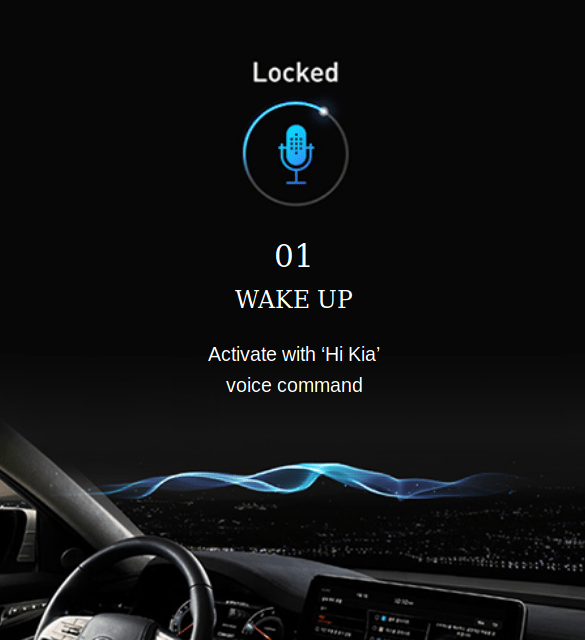

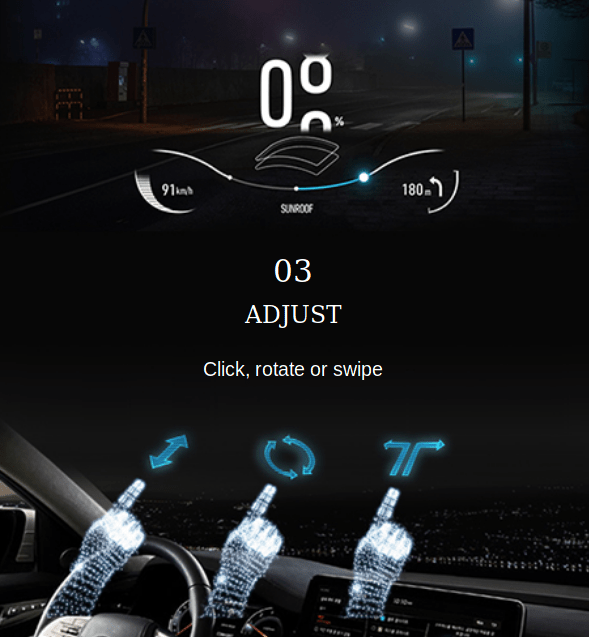

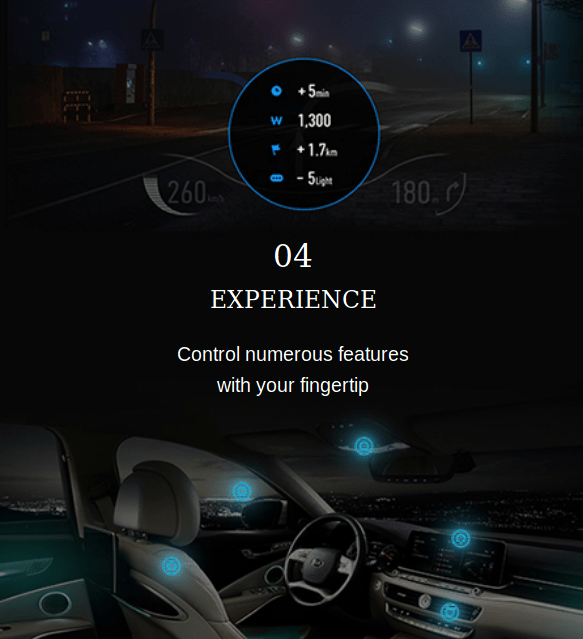

Lastly, V-Touch is Kia’s virtual touch-type gesture control technology. It uses a 3D camera to monitor the eyes and fingertips of drivers and passengers, letting anyone manage in-car features through a head-up display. Many mobility makers are working on hand and finger gestures as an intuitive way to control cabin features such as lighting, heating, ventilation and air-conditioning (HVAC), and the entertainment system. Ultimately, this translates to fewer buttons, fewer touch screens, and more open space.


Kia applies deep learning to data about its passengers and drivers. The system establishes a baseline of user behavior and identifies patterns and trends, then customizes the cabin to become a better-adapted environment.
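Kia has not detailed how this baseline is built. As a generic illustration only, one simple way to express "baseline plus deviation" is to compare a new reading against the mean and spread of earlier readings. Everything in the sketch below (the function names, the heart-rate input, the threshold of 2.0, and the cabin mode labels) is hypothetical and far simpler than a deep-learning system.

```python
from statistics import mean, stdev


def deviation_from_baseline(history, reading):
    """Return how many standard deviations `reading` is from the baseline.

    `history` is a list of earlier readings (e.g. heart rate in bpm).
    Returns 0.0 when there is too little data to form a baseline.
    """
    if len(history) < 2:
        return 0.0
    sigma = stdev(history)
    if sigma == 0:
        return 0.0
    return (reading - mean(history)) / sigma


def suggest_cabin_mode(history, reading, threshold=2.0):
    """Map a deviation to a hypothetical cabin adjustment label."""
    z = deviation_from_baseline(history, reading)
    if z > threshold:
        return "calming"      # reading well above the user's norm
    if z < -threshold:
        return "energizing"   # reading well below the user's norm
    return "unchanged"


if __name__ == "__main__":
    resting = [68, 70, 72, 71, 69]
    print(suggest_cabin_mode(resting, 90))
    print(suggest_cabin_mode(resting, 70))
```

The design choice here is that the comparison is always made against the individual's own history rather than a fixed population value, which is the essence of a per-user baseline.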

A computer animation professional with over 23 years of industry experience, having served in leading organizations, TV channels, and production facilities in Pakistan. An avid car enthusiast and petrolhead with a passion for delivering quality content that helps shape opinions. Has written for PakWheels as well as major publications including Dawn. Founder of CarSpiritPK.com.